This tutorial was run as a workshop at MAT/CNSI, UCSB, September 2 2010. Photo courtesy of Ritesh Lala (thanks!)

Tutorial: An Agent-based world of Chemotaxis

This tutorial constructs a world in which simple organism-agents are attracted to high sugar concentrations (a form of chemotaxis). It is an exercise in bio-inspired agent-based modeling using Max/MSP/Jitter. An agent-based model (a term closely related to multi-agent system) is a class of computational model for simulating the actions and interactions of autonomous agents (either individual or collective entities such as organizations or groups) within an environment. A bio-inspired model of an agent might include some of the following features:

Creating a population of such agents in Max/MSP/Jitter is possible, and the cosm objects can make it much easier. Since agents typically share structure, varying only in local state or configuration, we can model a single agent using a 'subpatcher' (an embeddable sub-document), and embed many such agents within a parent patcher. The environment, however, is shared between all agents, and should be modeled in the parent patcher. With this plan, we can refine the diagram with hints regarding which max/cosm objects can be used to implement each agent feature:

Create a world

The easiest way to get started with Cosm is to create a new patcher (Max document) from one of the templates: File->New From Template->cosm->cosm_world. Save this patcher somewhere on your system. Rendering can be turned on and off with the spacebar (or the toggle button at the top left).

Put an agent in the world

Create a new patcher to hold the agent; save it next to your world patcher, and call it 'agent'. Create a new object in this patcher ('n' key) and type jit.gl.plato cosm into the box. This should create a new shape in the cosm window. Note: any of the Jitter ob3d objects can be used in place of jit.gl.plato: jit.gl.gridshape, jit.gl.videoplane, jit.gl.mesh, etc.

Create a new object [cosm.nav] and connect its first outlet to the jit.gl.plato's first inlet. Cosm.nav can manage the position and orientation of the object(s) it is connected to, and can also report collision detection with other cosm.nav objects.

Give the agent a motor

For the agent to change over time, it needs to receive some kind of trigger that time is passing. In Max, the simplest form of trigger is a message, and the simplest message is (bang). A bang is an undifferentiated, unqualified event, which most objects interpret as a command to do whatever it is that they do. The cosm.nav object interprets a (bang) message to mean 'update the position & orientation according to current spatial and angular velocity'.

In Cosm, on every frame, a bang is sent to the 'step' name, so any receive objects with that name ([receive step], or [r step] for short) will output the same (bang) accordingly. Create a [r step] object and hook it up to the cosm.nav. Turn rendering on (spacebar) and send (turn 0 10 0) to the cosm.nav, and you should see the geometric object turn by 10 degrees around its Y axis each frame. The (turn) message sets the angular velocity of the cosm.nav, and its arguments are azimuth, elevation and bank components (in degrees). Here are all the navigation messages a cosm.nav understands:

| message | meaning | arguments | | | |
|---|---|---|---|---|---|
| position | set position in world coordinates | x | y | z | |
| rotate | set absolute orientation in world reference frame; axis/angle format | angle (degrees) | x-axis component | y-axis component | z-axis component |
| quat | set absolute orientation in world reference frame; quaternion format | w / real | x / i | y / j | z / k |
| move | set velocity in object-local reference frame | x | y | z | |
| turn | set angular velocity in object-local reference frame | heading / azimuth / yaw (degrees) | attitude / elevation / pitch (degrees) | tilt / bank / roll (degrees) | |
| halt | stop moving and turning; equivalent to (move 0 0 0) and (turn 0 0 0) | | | | |
| home | return to origin; equivalent to (position 0 0 0) | | | | |

For example:

To make the agent move, send a (move 0 0 0.1) message. This sets the spatial velocity of the object, along its object-local X, Y and Z axes, in OpenGL spatial units per frame. By default, we assume that an object's forward vector lies along its positive Z axis, hence we give the third argument of (move) a positive value. If the object has flown out of view, you can bring it back by setting its position directly. The message (position 0 0 0) will put it back at the origin. The (position) message is in world-global coordinates, not object-local like (move). Note: The Cosm world is toroidal: an object that leaves through one edge reappears at the opposite edge.
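To make the per-frame update concrete, here is a minimal Python sketch of what a (bang) could do under the description above. It is not Cosm's implementation: it keeps only the heading (yaw) part of (turn) to stay short, the names `Nav`, `step`, `wrap` and `WORLD_HALF` are hypothetical, the sign convention for heading is an assumption, and the +/- 32 world extent is the figure quoted later in this tutorial.

```python
import math

WORLD_HALF = 32.0  # assumed world extent: coordinates wrap within [-32, 32)


def wrap(value, half=WORLD_HALF):
    """Wrap a coordinate onto the torus [-half, half)."""
    return (value + half) % (2.0 * half) - half


class Nav:
    """Hypothetical, yaw-only stand-in for cosm.nav."""

    def __init__(self):
        self.pos = [0.0, 0.0, 0.0]  # world coordinates
        self.heading = 0.0          # degrees around the Y axis
        self.vel = [0.0, 0.0, 0.0]  # object-local velocity, units per frame
        self.turn_rate = 0.0        # degrees per frame

    def move(self, x, y, z):
        self.vel = [x, y, z]

    def turn(self, heading_rate):
        self.turn_rate = heading_rate

    def position(self, x, y, z):
        self.pos = [x, y, z]

    def step(self):
        """One (bang): apply angular velocity, then object-local velocity."""
        self.heading = (self.heading + self.turn_rate) % 360.0
        a = math.radians(self.heading)
        vx, vy, vz = self.vel
        # Rotate the local velocity about the Y axis into world axes.
        wx = vx * math.cos(a) + vz * math.sin(a)
        wz = -vx * math.sin(a) + vz * math.cos(a)
        self.pos = [wrap(self.pos[0] + wx),
                    wrap(self.pos[1] + vy),
                    wrap(self.pos[2] + wz)]


nav = Nav()
nav.move(0, 0, 0.1)   # forward along local +Z
for _ in range(10):   # ten frames
    nav.step()
```

Because (move) is object-local and (position) is world-global, only `step` needs to know the current orientation; `position` simply overwrites the stored coordinates.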

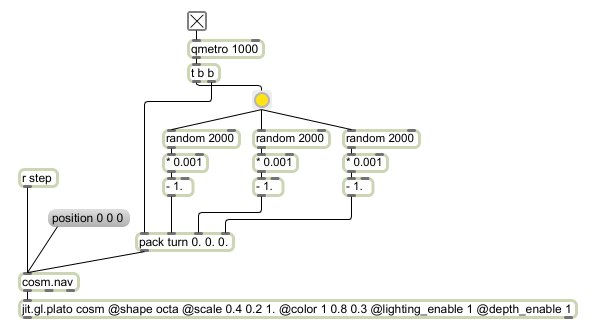

We can make the movements more interesting by adding some randomization to the rotation:

The agent should look something like this:

In subsequent screenshots the random turn will be encapsulated into a subpatcher [p random_rotation].

Sonify the agent

The same messages sent to the jit.gl.* object can also be sent to cosm.audio~, for spatialized sonification.

Here the cosm.audio~ receives a monophonic audio signal (in this case, a square wave), and position/orientation messages from cosm.nav. It uses this data to apply distance filtering to the signal, and to produce eye-space (or rather, ear-space) polar coordinates of the object (azimuth/elevation). These coordinates, and the filtered signal, are then encoded into an Ambisonic representation (spherical harmonics) by the cosm.ambi.encode~ object, and the harmonic signals are sent to an Ambisonic decoder via the named sends W, X, Y and Z. The Ambisonic decoder (not shown; it is inside the [p audio output] subpatcher from the cosm_world template) converts these Ambisonic domain signals into appropriate signals for each loudspeaker. Any number of loudspeakers can be configured, but the default is two at azimuth +/- 45 degrees (front left, front right).
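As a rough illustration of the encode/decode chain described above, the following Python sketch encodes one mono sample into first-order W, X, Y, Z signals and decodes it for two loudspeakers at +/- 45 degrees. It uses the traditional B-format weights and a simple 'virtual microphone' decode; the tutorial does not state which normalization, axis convention or decoder Cosm actually uses, so all of that (including positive azimuth pointing left, and the function names) is an assumption.

```python
import math


def encode_first_order(sample, azimuth_deg, elevation_deg):
    """Encode one mono sample into (W, X, Y, Z), traditional B-format weights."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample / math.sqrt(2.0)
    x = sample * math.cos(az) * math.cos(el)
    y = sample * math.sin(az) * math.cos(el)
    z = sample * math.sin(el)
    return w, x, y, z


def decode_horizontal(w, x, y, speaker_azimuth_deg, pattern=0.5):
    """Virtual-microphone decode for one horizontal speaker (0.5 = cardioid)."""
    a = math.radians(speaker_azimuth_deg)
    return (pattern * math.sqrt(2.0) * w
            + (1.0 - pattern) * (x * math.cos(a) + y * math.sin(a)))


# A source 45 degrees to the left, at ear height.
w, x, y, z = encode_first_order(1.0, 45.0, 0.0)
left = decode_horizontal(w, x, y, +45.0)   # front-left loudspeaker
right = decode_horizontal(w, x, y, -45.0)  # front-right loudspeaker
```

With these assumptions the source sitting at the left loudspeaker's azimuth comes out louder on the left than on the right, which is the behavior one would expect from the default two-speaker layout.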

In subsequent screenshots the cosm.audio~ section will be encapsulated into a subpatcher [p spatialize].

Make many of the agents

Several agents can be created by making instances of the Agent patcher. You might need to add [loadbang] objects to initialize state, turn the qmetro on, etc. A more advanced method to create many agents could make use of the [poly~] object.

Create a field

Fields in Cosm are modeled using 3D matrices, as voxel grids. The field is shared by all agents, so it should be created in the world patcher, not the agent patcher. In this example, the field matrix has been named 'sugar':

Pressing the button will fill the field with noise, via jit.gl.noise.

The simplest way of showing a field currently is using jit.gl.isosurface. It shows a surface of equal intensity across the field, just like a contour line shows positions of equal height on a map. The isosurface corresponds to one contour line. Where a contour line reduces a 2D field to a 1D line (or lines), an isosurface reduces a 3D field to a 2D surface (or surfaces). We can show the surface at which the field has a value of 0.5 by sending the (isolevel 0.5) message to jit.gl.isosurface. Regions outside of this surface have a value less than 0.5, and regions on the inside of it have a value greater than 0.5.

The scale and position parameters align the isosurface output with the coordinate frame of our world (which ranges from +/- 32 units). The output should look something like this:

Navigate the world

If you have a Logitech joystick or a SpaceNavigator plugged in, you can navigate the world using them (they should work automatically). Otherwise, you can use the keyboard bindings:

| Axis | Translate (move): increase | Translate (move): decrease | Rotate: increase | Rotate: decrease |
|---|---|---|---|---|
| X-axis (side) | . | , | w | x |
| Y-axis (up) | ' | / | right arrow | left arrow |
| Z-axis (forward) | up arrow | down arrow | d | a |
| Reset | ~ | | | |

If you have a different kind of joystick, you should be able to use it with a bit of extra work: look at the cosm.navigation patcher in /cosm_abstractions to see where you might start.

Diffuse the field

A real world is dynamic: heat flows, chemicals diffuse... We can add diffusion to our world with the [cosm.diffuse] object. To do so, we trigger the field every frame with another [r step], pass it through a [cosm.diffuse], and feed the result back into the field by the use of named matrices (the standard method of matrix feedback in Jitter). We can also add a decay component by multiplying by a number less than 1 in the feedback loop (using jit.op). Finally, we add a [jit.clip] to make sure that the field values always stay between 0 and 2:

Now the field will smooth out and eventually disappear (as it fades to zero); pressing the button above [jit.gl.noise] will re-seed the field:
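The diffuse, decay and clip loop can be sketched in plain Python as follows. This is only an illustration of the idea: the tutorial does not describe the kernel that [cosm.diffuse] uses, so the six-neighbor average, the wrap-around at the edges, the `rate` and `decay` values and the function names are all assumptions.

```python
import random


def diffuse_step(field, rate=0.5, decay=0.99, lo=0.0, hi=2.0):
    """Return a new cubic field after one diffusion + decay + clip step."""
    n = len(field)  # field is an n x n x n nested list
    out = [[[0.0] * n for _ in range(n)] for _ in range(n)]
    for i in range(n):
        for j in range(n):
            for k in range(n):
                v = field[i][j][k]
                # Mean of the six face neighbors, wrapping at the edges.
                neighbours = (
                    field[(i - 1) % n][j][k] + field[(i + 1) % n][j][k]
                    + field[i][(j - 1) % n][k] + field[i][(j + 1) % n][k]
                    + field[i][j][(k - 1) % n] + field[i][j][(k + 1) % n]
                ) / 6.0
                v = v + rate * (neighbours - v)            # diffusion
                out[i][j][k] = min(hi, max(lo, v * decay))  # decay, then clip
    return out


def total(field):
    return sum(v for plane in field for row in plane for v in row)


def spread(field):
    values = [v for plane in field for row in plane for v in row]
    return max(values) - min(values)


random.seed(1)
N = 6
sugar = [[[random.random() for _ in range(N)] for _ in range(N)]
         for _ in range(N)]
before_total, before_spread = total(sugar), spread(sugar)
for _ in range(20):  # twenty frames
    sugar = diffuse_step(sugar)
```

After a few frames `spread(sugar)` shrinks (the field smooths out) and `total(sugar)` falls (the field fades), mirroring what the isosurface shows in the patch.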

Let the agent sense the field

Fields can be 'sampled' at any world coordinate (the internal matrix is indexed with 3D linear interpolation), giving mobile points such as our agents the ability to detect the local field intensity at their current position. The [cosm.field.query] object has a named attribute @field which refers to the Jitter matrix to sample. When cosm.field.query receives a (position x y z) message, it indexes the named field according to the x,y,z position. Since cosm.nav outputs position messages on every frame, we can hook them up like this (in the Agent patcher):

The agent can detect the field, but as yet does not respond to it. For now, let's make the agents change appearance (and sound) according to local intensity:

Create a second kind of agent to modify the field

Now, instead of filling the field with randomness, we can introduce a second kind of agent whose role is to add sugar to the world. We can begin with our current agent, and modify the appearance, mode of movement, and sound; then save it under a different name, such as 'source'. To add data into the field at a particular position, we use [cosm.field.query] again, but this time we send it a message (add v) where v is the amount of 'stuff' we want to add to the field, at the position sent by the cosm.nav. As with reading, writing to the field uses 3D interpolation to distribute the value across neighboring voxels.

Add the 'source' patcher object to the world, and the output should start to look like this:

Notice how the field isosurface now envelops and follows the blue sources. Also notice how the one agent to get close to a source has turned from green to red.

Connect a sensor to a motor

Finally we are ready to attempt to construct chemotaxis. Crucially, our agents can sense the sugar concentration in their local neighborhood, but they can't see anything else; they can potentially control their velocity and angular velocity, but how do they know which way to go?

We can take a leaf out of nature's book. E. coli manages to follow concentration gradients to glucose heaven by modulating two different kinds of movement: swimming and tumbling. When swimming, the bacteria move more or less straight ahead; when tumbling, they change direction randomly. They also have a form of memory: they can effectively measure whether the concentration is rising or falling over time. We can replicate that in our agents by comparing current and previous concentration using a delta function, and switching between two different motile modes accordingly. Roughly speaking, if things are improving, keep going forward; if not, start tumbling.

We can do this in Max with a simple bit of basic patching, making use of the right-to-left outlet firing order that Max objects usually use:

When the sugar delta is negative, concentration is falling so tumbling behavior should begin:

The [< 0] object detects this (returning 1 when the delta is negative, 0 otherwise). This is routed back to the top of the patcher, where [sel 1] sends a bang from its left outlet when sugar is decreasing, and from its right outlet when it is not. The decreasing case now triggers the random rotation (instead of a qmetro). It may be necessary to make the rotations more extreme than before, to get proper 'tumbling' behavior. The increasing case (right outlet) simply sends the (turn 0 0 0) message to switch into straight-ahead movement.
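The same swim/tumble decision can be written as a few lines of Python. This is a sketch of the logic only, not a translation of the patch: the class name `RunAndTumble`, the `max_tumble_deg` range and the choice to randomize all three (turn) arguments are assumptions made for illustration.

```python
import random


class RunAndTumble:
    """Hypothetical controller: compare current and previous concentration."""

    def __init__(self, max_tumble_deg=90.0, rng=None):
        self.previous = None                  # last sensed concentration
        self.max_tumble_deg = max_tumble_deg  # assumed tumbling range
        self.rng = rng or random.Random()
        self.mode = "swim"

    def update(self, concentration):
        """Return the three (turn) arguments to send for this frame."""
        if self.previous is None:
            delta = 0.0  # no history yet on the first frame
        else:
            delta = concentration - self.previous
        self.previous = concentration
        if delta < 0:  # same test as [< 0]: concentration is falling
            self.mode = "tumble"
            r = self.max_tumble_deg
            return tuple(self.rng.uniform(-r, r) for _ in range(3))
        self.mode = "swim"
        return (0.0, 0.0, 0.0)  # (turn 0 0 0): keep going straight


agent = RunAndTumble(rng=random.Random(0))
modes = []
for sensed in [0.10, 0.20, 0.15, 0.15, 0.30]:
    agent.update(sensed)
    modes.append(agent.mode)
```

Note that an unchanged reading counts as 'not falling', exactly as with [< 0] and [sel 1], so the agent only tumbles on frames where the concentration actually dropped.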

The effect is quite dramatic: the agents 'find' the sugar sources quite quickly, and stay close to them. The agent patch was also modified to use the current mode (swimming or tumbling) to change color (green or red), rather than local concentration:

The final patches: chemotaxis.zip